NO EXECUTE!

(c) 2008 by Darek Mihocka, founder, Emulators.com.

Happy Canada Day! July 1 2008

Ba-jing! Too Much, Too Fast?

Two weeks ago I travelled to Beijing for the ISCA 2008 conference. For me the most interesting aspect of the trip was not the conference itself but rather the miles of walking I did around the city that will be hosting the Olympics in just a few short weeks. I'd never been to mainland China before and so my mental image of China before the trip was based on those horrific images of the 1989 Beijing Massacre of course, but also the stories of sweat shops producing tainted products, the recent and ongoing riots in Tibet, the flee of citizens from Hong Kong prior to its handover from Britain, the news stories of massive death tolls in coal mines and of entire villages destroyed by earthquakes. I very much expected a communist police state and to have my papers checked at every corner. For that reason I packed very minimally, bringing a throwaway Dell laptop instead of my good Apple Macbook, a throwaway camera instead of my good Sony, and the bare minimum of other travel accessories.

The good news is that I was far too pessimistic and paranoid. I was pleasantly surprised to find a city rapidly hurtling itself into the 21st century. China, or at least Beijing, has cast aside its communist past and embraced the whoredom of capitalism with the fervor of an American soccer mom waiting in line at a post-Thanksgiving sale. I have been to cities like Tokyo and Singapore before and Beijing struck me very much as an emerging version of those cities; the latest entry into the list of the world's cities trying to modernize and embrace the Western way of life. The stories you read about of "China, Inc." and of "Two Chinas" seem quite accurate. Imagine a Blade Runner world of high technology and filth, rich and poor, of large skyscrapers and narrow densely packed alleys. There is a bit of everything all mixed together, yet unlike a large American city, everyone was always polite and I never felt unsafe or unwelcome.

The bad news: China now apparently has a new middle class of 300 million people, as large as the population of the United States, yet that still leaves about a billion peasants living in poverty. The middle class is embracing all things Western - Audi automobiles, Starbucks, designer clothing, and yes, even Mexican restaurants - while slaves toil away building the next bigger and better skyscraper. I can't say that this is really a good thing for either class. Is American consumerism really the ideal that the rest of the world strives for? Or is this merely a show being on for the world in preparation for the Olympics?

Being an idiot, I booked my hotel online without consulting a map of Beijing first. And thus of course I managed to book a hotel about as far away from the Olympic Village venue of the ISCA conference as possible. I ended up staying along Jianguo Road, the main east-west street through Beijing that runs along subway line 1 and passes such landmarks as Tiananmen Square, the Forbidden City, and the Silk Street market. This forced me to either take the subway or taxi every morning the 10 miles to the conference venue, or as I did on some days, take long walks along this main stretch of the city. It felt like walking the Las Vegas strip, except if the Las Vegas strip was simultaneously all under construction at once. The transformation that is taking place is just awesome.

Beijing is choked in smog; unbelievably "foggy" due to the massive amounts of air pollution. When my plane landed late at night, it looked like the kind of fog we get here in Seattle most winter nights. The drive to the hotel revealed little about the city as visibility was minimal. Even the taxi driver missed my hotel and had to loop around because one couldn't read the sign or see the lobby of the hotel until you were literally driving right in front of it. The conference workshops didn't start for another couple of days so the next day was purely tourist mode. I took this photo from my hotel room the next morning and this was pretty much the view from my hotel for the next 6 days. This is not fog, this is not rain. This is the air pollution that hovers over the city every day. For six days I did not see the sun, the moon, stars, or blue sky. At best in the middle of the day you could stare directly up at the sky and see the outline of the sun through a grey haze.

Taking the subway three stops to Tiananmen Square was refreshing. The city above ground is hot, humid, and malodorous. Inside the subway, the air is cool and fresh. The subway is very cheap (about 2 yuan, or about 30 U.S. cents) and very efficient. It is the best way to get around the city. But beware the unfinished subway stations. The tourist maps already have the new subway lines to the Olympic Village drawn in, but as I found out, as of June 25 they are still not opened. I spent one hour that day walking around in circles at one subway station which the map indicated was the transfer station to Line 10, but Line 10 doesn't exist yet. Around the city you will see gated subway entrances to as yet unopened subway lines.

Taxis are an inexpensive alternative to the subway. Compared to the outrageous rates here in the U.S., a taxi drive in Beijing costs about 1/5th as much. The 20 or so mile drive from the airport to my hotel worked out to 70 yuan, or about 11 dollars. Try that in Seattle or New York. A 15 minute drive home from Sea-Tac airport costs me 40 dollars, whereas in Beijing even the 30 minutes in rush hour from my hotel to the Olympic Village was only a 40 yuan drive, 6 dollars! The trouble with the taxis that I found is that every single taxi driver I had seemed illiterate, or at least unable to read a map. I made sure to have a map of Beijing in Chinese so that I could point to my hotel or point to the Olympic Village and the driver could read what I was pointing at. Surprisingly, most of the time the driver then had to either stop to ask a policeman (and there are plenty of those on every street corner, in that respect it is a police state). Which makes me wonder, how recently did these people become taxi drivers and how well did they know the city? 7 million people are expected to descend on Beijing in just a month and nobody seems to know how to get around!

Walking north toward the Olympic Village, the area known as the CBD looks like a giant ghost town under construction. Literally entirely miles of the city are being rebuilt to look like New York or San Jose. Western looking shopping malls with names like "The Place" and condo complexes such as "SOHO" are springing up but not quite open. Unlike Tokyo, this new version of Beijing features very wide boulevards, large and unique skyscrapers, and malls with just about every American chain you can think of. From garbage junk food like McDonalds, KFC, and TGIF, to the obligatory Wal Mart (yes, and the prices were obscenely low on just about everything) to about every upscale retail outlet that I can find in downtown Seattle. But far more sprawled out than what I've seen in Japan or typically here in the States.

The architecture of the new buildings impressed me the most. These are not cookie cutter developments as some of the eye sore garbage that is popping up all over North America. Almost each and every building is unique in some way. Curved tops, curved sides, holes in the middle, shapes I couldn't even imagine a building could be built in. The most famous is probably the new CCTV headquarters which were being built a couple of blocks from my hotel. When I first saw this building I had to take walk around and make sure I was seeing what I thought I was seeing. A building the goes up, then sideways, then sideways again, then down!

Among all this steel, concrete, and glass, is the slave labour that is building these giant monuments; they're not all working in the toy factories and garment sweat shops. I call them slave labour because one evening when I was walking around past midnight I realized that the construction workers are caged in at these construction sites. They live on-site in rather shoddy looking barracks. Unlike here where they punch a time clock at 5pm and go home with their lunchbox, these guys live and work at these sites around the clock. The work goes on all night as the sparks from welding up high in these buildings could be seen at all hours. Slave labour is alive and well.

I did have to visit the electronics district of course. What a crazy place! Building after building of allegedly "authorized" dealers, selling Apple iPods, Sony cameras, and Microsoft Office. Floor after floor, dealer after dealer, pimping laptops, video cards, flash drives, you name it. As with the Silk Market which sells fake shoes and handbags, the prices for electronics were incredibly arbitrary. Cash is preferred, and several times I saw tourists hand over money only to then have the price disputed further. I myself had to pry bills out of one woman's hand who would not honour the price that she agreed to. Yet at the Silk Street Market fat American soccer moms were packing the market buying all sorts of counterfeit garbage. So much for all that backlash against Wal Mart and defective Chinese goods. When it comes down to it, ugly American tourists have few standards.

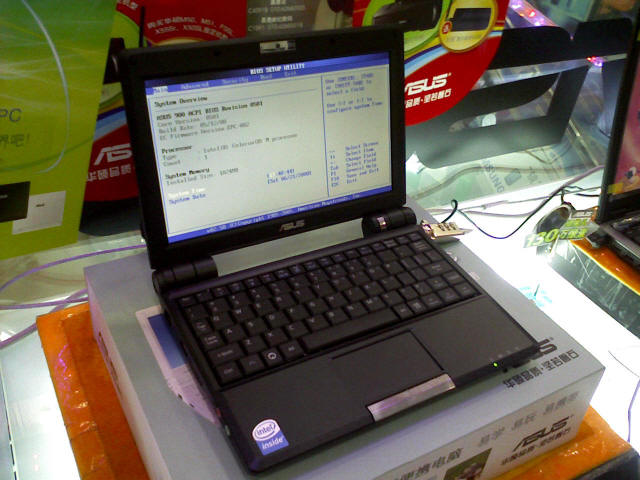

Being one of those ugly tourists, I was hoping to pick up one of the second generation ASUS EEE PC models, which I've read are better suited for running Windows. I did spot one such machine featuring the larger LCD screen (you may notice the speakers are no longer eating up space next to the screen) and upgraded from 512MB to 1GB of memory. Still, with only 4GB of built-in storage as before, and after watching other people getting robbed blind by the shady vendors, I lost my urge to shop and passed.

Be prepared to haggle a lot for everything. Even in restaurants and bars prices seem arbitrary. Sometime I was charged as little as about 3 dollars for a cocktail, and sometimes over 16 dollars, and sometimes whatever paper currency I happened to have in my hand. Sometimes I asked for gin and got vodka, sometimes the bartender wouldn't even know how to mix the drink that was listed on the menu. This whole Western lifestyle has been thrust on the Chinese so quickly that I think it is as much a shock to the locals as to us tourists.

If you are planning to go to Beijing for the Olympics, prepare for a crazy and relatively fun time, unless you have respiratory issues, then be prepared to cough and sneeze as most people around me did quite a lot. The city has a massive amount of work to complete in the next month prior to the start of the Olympics - subway lines to complete, buildings to complete, hotels and shopping malls to open, taxi drivers to educate, and an unimaginable amount of pollution to clean up. It is making phenomenal progress to put on a good show. The monetary investment alone to fund this transformation must be staggering. Given where China was even 19 years ago, I wonder if this is all for show for the Olympics, or if this growth and progress will sustain itself once the ceremonies are over. We'll see. I myself will be sitting at home in Seattle in August and watching it all in high definition. Six days was plenty for me. Hope the athletes make it a full two weeks.

Some other fascinating sights around Beijing. Click to enlarge thumbnails.

ISCA 2008 - The "What If" Conference My primary purpose of going to Beijing was to present a paper at one of the workshops at ISCA 2008, the 35th annual gathering of geeks at what is called the International Symposium on Computer Architecture at the Crowne Plaza hotel right next to the Olympic stadium. A lot of familiar faces attended the cocktail reception on the Sunday evening, pictured here, but the real work already began the day before on Saturday morning June 21. It is that morning that Stanislav Shwartsman and I presented our paper on Virtualization Without Direct Execution (paper, slides) which years from now computer textbooks will look back upon as the paper that changed computing. Ok, probably not, as we didn't really say anything that wasn't said two decades ago or that I haven't ranted about in this blog over the past year so I won't bore you with the details, just read it. The good news is that our paper was well received. I had feared that our paper was somewhat out of place, or worse, I'd get the kind of feedback I've gotten from certain Intel, Microsoft, and VMware employees over the past couple of years in my argument against hardware based virtualization. I was relieved to find out that the themes at the conference were very much emulation and binary translation friendly, even surprising so given the huge Intel turnout. Intel started the conference off with two keynotes speeches by recent Intel hire Dave Ditzel. You may not know his name but you certainly know the company he founded in the 1990's: Transmeta. Dave is now a vice president at Intel in charge of Hybrid Parallel Computing, a sign that Intel very well may be seeing the light as far as what I've been saying about eliminating unnecessary hardware from the microprocessor and doing the work in software. I thoroughly enjoyed Dave's two keynotes as he described Transmeta's techniques and philosophies, as it made clear to me that Transmeta "got it" back in the 1990's as AMD and Intel were busy stupidly fighting over clock speeds and cache sizes. On June 23, the first day of the main conference, the keynote address was given by another Intel executive, their Chief Technology Officer Justin Rattner, entitled "Microarchitecture is Dead". His keynote may similarly signal a shift at Intel, as he pointed out that for all the work on creating low power CPUs, the difference in overall power consumption between an idle CPU and a busy CPU varies from 3% to 8% of a typical device. The point being that all the focus on minute details of CPU architecture to squeeze a percent here or a percent there is moot given that power consumption involves a much broader scope of issues. He discussed the long time that it takes to redesign a CPU and bring it to market - something like 7 years of planning and design in total to then actually have the device in production for only 1 or 2 years. Wow! Even I was off the mark there, in one of my old Pentium 4 rants I said that it takes 5 years to bring a chip to production. I was being too optimistic. This means that the Core 2 processors that are on the market today really began line in about 2001, not surprising, as that would be shortly after the backlash against the Pentium 4 started. But it also means that engineers at Intel today are already working on the chips that won't hit store shelves until the year 2015. My solution the problem of course is simplify the chip. Strip out things that aren't absolutely needed and do them in software. That's the Transmeta philosophy, and surprise, it is now being experimented with at Intel already. The new "Nehalem" Core 2 chips coming out in about 6 months apparently implement the new SSE 4.2 instructions in some kind of new microcode which controls what he called RLUs or Reconfigurable Logic Units. Lacking a more detailed explanation, this sounds somewhat like what is done to program FPGAs. Justin called this "Late Binding of Instructions", which allows Intel to add new x86 instructions to a processor in just 6 months instead of 7 years, and extend the instruction set much sooner. Not being a fan of the recent explosion of SSE instructions, I really hope this does not result in another barrage of SSE instructions. What I do hope this means is that future Intel processors will implement more of the x86 instruction set in such reconfigurable logic and shift to the Transmeta of completely decoupling the x86 instruction set from the underlying hardware. At that point you're basically doing emulation, my favourite topic!!! On June 24, there was a keynote address from Chief Scientist at nVidia, David B. Kirk, entitled "Top 10 Problems". He really listed about a dozen major issues which I will summarize here, some of which should be glaringly obvious to most software and hardware engineers: Interestingly David's solution to these problems was to mention nVidia's CUDA at just about every slide, pointing out that there are 70 million CUDA capable nVidia video cards out there. I have to disagree with David there on the viability of this, for while nVidia may have a lot of many-core GPU based video cards out there, what nVidia is really pushing is high priced video cards that only a small fraction of computers users have or can ever afford. I don't buy $300 video cards, and I certainly don't think that the next billion PC users in emerging countries will do so either. So while I mostly agree with what was said at these three keynotes, I strongly believe in still researching techniques to take advantage of the capabilities of the existing billion PCs out there, not just hoping that people buy new hardware. nVidia is taking the view of VMware or Microsoft, that people will just blindly buy the latest and greatest hardware, and that's not how to sweep existing problems under the rug. To the conference itself, there was exactly such a paper about designing hardware to hide bugs. Seriously! The paper, which originated here from Seattle from the University of Washington and from AMD, was funded by Microsoft. Yes, what better way to make buggy Microsoft software run better than by paying people to develop hardware that hides the bugs. Gaaaaaaaaah! Of the dozens of papers presented at ISCA, this is the one paper that I am skeptical about. Titled Atom-Aid, it claims to hide "up to 100%" of what they call "atomicity violations", or as real people call them, race conditions. Put simply, an atomicity violation is the situation where one executing thread is in the middle of updating some shared memory location and another thread then unexpectedly updates the location as well, leading to incorrect program behaviour. This is a very common problem and so trying to detect and eliminate such race conditions or atomicity violations is desired. But to have the hardware to it, no way. It's Microsoft responsibility, and the responsibility of any software developer, to find such bugs up front and not ship them in the first place. What is a better line of attack is to give software developers the tools to find such problems before the code is released. And the Atom-Aid people did do this to prove their point. They in fact used Intel's PIN instrumentation framework (AMD, using Intel's tools, ha!) to show that such bugs do exist today in common software - in MySQL, in Java, in Apache, etc. Strangely, the Microsoft funded project apparently didn't look into Windows related bugs, hmmmm. What the basic premise of their scheme states that if you have window, say, 1000 instructions, or up to 8000 instructions in size and can analyze the data dependency within this window, you can find atomic blocks such as the read and update of some shared memory location. This type of analysis, I agree with, and I truly wish each and every software developer on the planet had access to such tools and used such tools on every line of code written. But what I disagree with is their claim, that by having the hardware implement such a window and commit such blocks of instructions atomically, that (their words) you can hide "more than 99% of atomicity violation instances in virtually all workloads". I don't buy this claim. First of all, some atomicity violations can span millions of instructions. So at what point do you cut off your window size and say good enough? And knowing how slow PIN is and that it is anything but a cycle accurate simulator, how can the truly make this claim? PIN only instruments user mode portions of code, and therefore does not catch kernel mode events such as context switches. One can "hide 99% of atomicity violations" today very simply - run your multi-threaded program on a single core CPU or with affinity set to a single CPU core. That alone will hide many race conditions. This is why so many race conditions go unnoticed, because it has not been until the past two or three years that the average PC or Mac user is even running a multi-core system. But a simple context switch, perhaps a page fault or a timer interrupt, can easily drag out a race condition out to millions of clock cycles. Simply hiding more of the bugs and extending the mean time to failure of a piece of software does not solve the fundamental bug in the code itself, and that's why I am so offended by this paper. Giving better tools to developers is far better than to give computers users a false sense of security or reducing Microsoft's product support calls. On the topic of tools, there were several papers tackling an issue of great interest to me, how to efficiently record a long trace of program execution and then deterministically play it back for the purposes of debugging or bug analysis. This area of research I am very much for and a few years when I was at Microsoft Research I worked on a project called Nirvana which, similar to Intel's PIN and DynamoRIO, allowed one to instrument and trace and play back the user mode portion of a multi-threaded program. Useful but not the full solution, as what is really needed on today's complex operating systems is full-system multi-core trace and playback. VMware has made recent claims to supporting such a feature in VMware Workstation, but if you read the fine print, they weasel out of the issue by only supporting a single-core virtual machine. True multi-core trace and playback is not available to the public from VMware or from Microsoft today, and that's where these research papers are going. One such paper, entitled "Rerun: Exploiting Episodes for Lightweight Memory Race Recoding" and another one entitled "DeLorean: Recording and Deterministically Replaying Shared-Memory Multiprocessor Execution Efficiently" (whew!) both strive to find efficient hardware-assisted techniques of recording the instruction stream and memory accesses of multi-threaded code and playing it back in exactly the same order. Both papers point out that there are "chunks" or "episodes" as they call them of runs of instructions which do not conflict with other threads. By detecting the boundaries of such chunks/episodes and time stamping them, you can now deterministically replay the chunks/episodes at a later time for the purposes of reproducing a bug or detecting race conditions. What I like about these two similar schemes is that the techniques can be implemented purely in software (this has already been proven to some extent with PIN and Nirvana) and generate fairly compact traces, one the order of a few bits per instruction on average. This is far superior to brute force schemes such as single stepping the processor and dumping the state at each instruction, which can generate gigabytes of traces for a second worth of execution. More of this kind of research needs to go on, specifically with improving the performance of such tracing. Naturally, there was a paper on this very topic from Intel Research titled "Flexible Hardware Acceleration for Instruction-Grain Program Monitoring". Intel's paper deals with the topic of adding simple functionality to the hardware to allow instrumentation tools such as PIN to run faster. Intel's paper, and several other papers (including our paper) analyzes what the causes of slowdowns are for instrumentation. i.e. what is it about PIN instrumenting instructions that causes most of the slowdown. This is very similar to general topic of emulation and binary translation and the slowdowns incurred in virtual machines, and so it is not surprising that Intel's paper proposes almost the identical instruction as Stanislav and I did for quickly mapping and keeping track of guest memory addresses. In PIN's case, you want to keep track of traced memory addresses and be able to store some additional information about those memory accesses, what they call "metadata". In our case with Bochs and Gemulator, we need a fast mechanism to map a guest memory address to a host memory address. Intel calls their mapping scheme a "metadata TLB", while our paper calls it a "software TLB". Intel proposes a new x86 instruction called LMA (Load Metadata Address) while we proposed one called HASHLU (Hash Lookup). Different nomenclature, but one and the same concept. And that's terrific, because basically you have two completely separate research efforts coming to exactly the same proposal. In our paper the potential speedups of such an instruction are estimated, while Intel went a bit further and actually simulated the acceleration, which they found could reduce the overhead of PIN's tracing to under 2x slowdown. Again, PIN or Nirvana style tracing is very similar to just plain emulation x86 for the purposes of a virtual machine such as Bochs, and so if Intel's calculations are correct, it means that one could implement a virtual machine using this one extra instruction at about the same performance level as the brute force hardware VT based implementations of VMware and Microsoft's Virtual PC/Server today. And thus validating what I've known all along, that hardware VT is unnecessary and should be eliminated from the CPU entirely to save die space. One more related paper I'll mention from the Parallel Processing Institute in China titled "Practical and Efficient Information Flow Tracking Using Speculative Hardware" combines all these concepts to tackle the problem of tracing a program and analyzing it for attacks and defects as it is running. This is something Microsoft Research has looked into as well in the form of the Vigilante project, and in some ways would be the holy grail of reliability and security - to detect errors and virus attacks on the fly with minimal runtime overhead. Unfortunately as this paper points out, even in the single-threaded case the slowdown today stands at 2x, 5x, even 27x depending on the scenario. This topic I believe is a vital area of future research. Tracing and playback, race detection, and virus detection should be a first class design goal of both hardware and software. And not just so you can hide the issue from the user but expose it as soon after it happens as possible, and hopefully leading to a future where such issues are caught and eliminated during development and never even make it to the customer's machine. A fascinating paper on Intra-Disk Parallelism from the University of Virginia proposed that mainstream consumer hard disk drives revert back to what they call "Intra-Disk Parallelism" - actually having multiple physical heads and actuator arms, the "heads" in the Tracks/Cylinders/Heads specification of a drive. Today, cheap consumer drives really only have one head despite what the BIOS setting might say, and to decrease seek time most people simply set up a cheap striped RAID-0 array. The authors argue that an IDP drive would consume less power overall than a RAID array while giving similar performance. Some interesting facts I wasn't aware of: that power consumption of a hard disk is proportional to the fifth power of the platter size, and cube of the rotational speed. Thus the lower you can make the latency the lower you need to spin the platter, and the less power is consumed. And multiple small platters is better than one large platter. Of course the paper points out the obvious, that flash drives will likely make mechanical hard drives obsolete in about 10 years, so in some ways this is similar to a race to perfect typewriters. Overall, a large number of topics were covered at ISCA which are far beyond my understanding or the scope of this blog. Of the papers that my puny brain did understand relating to code performance and analysis, I noticed a few issues popping up over and over again. It's hard enough analyzing a single-threaded system, let alone the 100-core systems that Intel is promising to put on our desks in just a few years. And so the first issue is with tools. Just about every single paper I just mentioned used completely different tools and different CPU instruction sets for their tracing and analysis. One paper of course used PIN to analyze x86 code, which is the instruction set that runs on most home desktop computers. But other papers analyzed Alpha code, or Itanium code, or SPARC code, or in my case, 68040 Macintosh code. Pick up just about any arbitrary computer science research paper and you'll see that the code being analyzed is not common Windows code or even x86 code. It's RISC code running on Unix and generally benchmarking the usual lineup of SPEC2000 benchmarks. I HATE SPEC BENCHMARKS! A handful of contrived benchmarks written for mostly single-core systems that primarily measure clock speed are not indicative of real-world code. SPECJbb2000 for example is well known to be nothing more than a memory bandwidth test, since the multi-threaded tests that it performs are what is called "embarrassingly parallel". So it bothers me a lot when I read paper after paper that just give results for 1990's instruction sets running bogus benchmarks. I'm just as guilty of that as everyone else, since my analysis is partly based on 1998-era 68040 Apple Macintosh code, but I'll argue that's as valid as 1990's RISC code. Therefore the problem is, we might all be gathering obsolete data! RISC processors and older processors such as the 68040 are easier to model, simulate, and study, but they're 1990's technology. What the desktop industry needs - both the chip designers and the software developers - is analysis on real-world 2008-era code traced or simulated on 2008-era hardware such as Core 2. This isn't happening from what I can see and that's a serious problem, because otherwise you get stale research data that leads to the development of costly mistakes such as the Intel Pentium 4. Intel bet the farm a decade ago on high-clock-speed deep-pipeline architectures such as Netburst (used in Pentium 4 and its variants), and that was based on research from the 1990's that, well, maybe wasn't measuring real-world code or anticipating what real world code in the 2000's would be. As David Kirk reminded everyone, "hardware is software". This issue could have been resolved years ago if people took it seriously sooner. I ran across this 1990 keynote speech called The End Of Architecture by legendary computer designer Burton Smith urging the industry to take action on researching parallel computing now (i.e. 1990) or else face the end of the microprocessor. Does his title sound familiar to one of the 2008 keynote titles? Burton gave this keynote at the ISCA conference eighteen years ago and stated back then that: "the microprocessors of today are as ill-suited to general purpose parallelism as the dinosaurs they are preying on" 1990, keep that in mind, because he went on to say: "What architectural shortcoming is preventing their use in general purpose parallel machines? The answer is straightforward: inability to tolerate unpredictable fine-grain latency from any source, notably from the use of shared memory or from fine-grain synchronization." And yet what have AMD and Intel done for the past 18 years? Introduced deeper pipelines, longer latencies to synchronization operations such as CMPXCHG, longer latencies to cache misses, longer latencies to context switches, and most recently the ridiculously long latencies caused by hardware virtualization. In 1990 most of these latencies were in the tens of cycles; today they are in the hundreds or even thousands of cycles. So in addition to our Virtualization Without Direct Execution paper which describes several software techniques to avoiding such costly latencies, it is should be no surprise then that eighteen years after Burton's keynote that the papers and keynote addresses at ISCA 2008 still touched on this very issue of reducing latencies and minimizing the granularity of atomic operations by making frequent references to Amhdal's Law. Multi-threaded multi-core scalability doesn't happen without very fine granularity and low latencies. Academia and industry "get" what the problem is, but after 18 years, are they finally going to act on it or just keep writing more papers based on possibly irrelevant data? Microsoft and VMware were surprisingly under-represented at ISCA. While Intel hosted workshops, gave keynotes, and wrote papers, Microsoft had their logo up and VMware had a stack of Mountain View recruiting flyers at the registration desk. Perhaps it is not surprising that one of the keynote speakers razzed Microsoft for taking five years at a time between OS releases, and suggested that the research community focus on studying Linux and gcc. Now that Bill is gone, is Microsoft asleep? Both Microsoft and VMware need to step up to the plate and participate more in architecture research. Show up at conferences. Publish papers. Open your code. Participate in meaningful ways beyond just thinking about rolling around in money. Otherwise research truly will focus on Linux, gcc, and Xen by default. I plan to attend and hopefully present another paper next year at ISCA 2009 in Austin Texas. Let's hope that the research between now and then changes focus to studying the mainstream architectures on people's desks today.

188 Bottles Of Beer On The Wall... I promised you an announcement in early July and now the time has arrived. Are you ready? Sitting down? Last summer I announced a multi-year effort to develop a new suite of open source emulation tools and virtual machine products. Tools to help developers simulate and debug code, and virtual machines to bring legacy software to more host platforms than ever before. Existing products such Intel's VTune, VMware Fusion, and Microsoft Virtual PC may do the trick today, but as I have ranted for the past year, the very hardware-centric approaches that there products rely on does not scalable to the next billion low-end low-cost PCs that the emerging markets will be using. Nor do they scale to the kind of heavily-multi-core architectures that chip makers are heading toward. As keynotes and papers alike hinted at during the ISCA conference, binary translation is a more sensible, cost-efficient, and scalable approach to the future - both for the implementation of future systems and for analyzing the code in the first place. For me this whole past year has been about research. Bochs, Gemulator, and SoftMac were the existing emulators that served as the Guiney pigs to try out new ideas. Solving the problems of memory mapping, flags simulation, efficient dispatch, memory constraints, etc. All the boring technical stuff that was covered in this blog and in our ISCA workshop paper, that I care about dearly but ultimately end-users of computers could care less about. These issues are important but unless they show up in real products that real users can easily use, then who cares, I may as well be spending my time dreaming up wild theories about Dark Matter. (Here is one: photons have mass, and the missing mass currently attributed to "dark matter" is really the sum of the mass of all of the existing photos in flight throughout the universe. Our sun alone emits 2E14 tons of hidden mass each year which is floating around our galaxy keeping it from flying apart. Nobel Prize please). What the past year and four betas releases of Gemulator and two releases of Bochs have taught me is that there are plenty of clever ways to improve the performance of virtual machines, and to do so in ways that does not require costly new hardware. There are useful products to be developed. I believe that open source and free virtual machines can match anything that VMware or Microsoft do, and as was made abundantly clear at ISCA, serve as a base to also build research tools on. Over ten years ago I learned that I can talk until I'm blue in the face about running Apple Macintosh software on a PC, but until I showed up at Macworld San Francisco in 1998, not too many people believed it could be done or even why they'd care to do it. I faced similar doubt in the 1980's and early 1990's about the ability to run Atari 800 or Atari ST software on a PC until I actually showed up at computer user group swap meets and proved it. Over the past year I've made claims that even Intel engineers have had a hard time believing, and so I intend to prove them. This is a photo of me, eleven years ago in Dallas Texas at a Saturday morning computer swap meet showing off a prototype of what would be the Macintosh emulator. For the next four years I did the same at Macworld, Comdex, CeBIT, Windows World, and other trade shows, until the world had no doubt that legacy Mac software could run on Intel based PCs. You're welcome, Apple. With that all in mind, it is time to put a year of research to rest and move on to the next step of the plan... to write more code, turn betas into products, burn to CD-ROM, and go to the funnest swap meet there is, Macworld! In 188 days, Emulators.com is exhibiting at Macworld San Francisco, January 2009. This will be the ninth exhibit for emulators.com at a Macworld Expo in the past 11 years, and there will be something for Mac users of course, for Windows users, for Atari users as always, and for developers. More details over the next 188 days. Happy Canada Day! Well, was this announcement good for you? Please don't hold back in telling me how you feel. Click on one of the links below and let me have it: