NO EXECUTE! A weekly look at personal computer technology issues.

(c) 2007 by Darek Mihocka, founder, Emulators.com.

November 5 2007

As promised, the Gemulator 2008 Beta 2 release which I had mentioned in the previous installment two weeks ago is now available for download. If you are interested in Atari ST emulation or classic Apple Macintosh emulation, or if you wish to compare it against past releases, you can download the Beta 2 here. Although not quite ready for prime time, Beta 2 is the proof of concept I've been discussing that demonstrates that one can replace hardware-based memory protection techniques in a virtual machine with completely software-based memory translation techniques using software-TLB lookups. This is done to reduce host page faults and, in general, to run even faster.
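To make the idea concrete, here is a minimal sketch of a software-TLB lookup. It is an illustration only, not the Gemulator or SoftMac code: the 4 KB page size, the 256-entry direct-mapped table, and the names `SoftTLB`, `GuestFault`, and `page_table` are all assumptions made for the example, and Python is used purely for readability.

```python
PAGE_BITS = 12                   # assumption: 4 KB guest pages
PAGE_SIZE = 1 << PAGE_BITS
TLB_ENTRIES = 256                # assumption: small direct-mapped table


class GuestFault(Exception):
    """Raised by the emulator itself when a guest page is not mapped."""


class SoftTLB:
    """Translates guest addresses to host offsets entirely in software."""

    def __init__(self, page_table, host_memory):
        # page_table: guest page number -> host offset of that page's start
        self.page_table = page_table
        self.mem = host_memory
        self.tags = [None] * TLB_ENTRIES    # guest page cached in each slot
        self.bases = [0] * TLB_ENTRIES      # host offset cached in each slot
        self.misses = 0

    def translate(self, guest_addr):
        page = guest_addr >> PAGE_BITS
        slot = page & (TLB_ENTRIES - 1)
        if self.tags[slot] != page:
            # Slow path: consult the page table and refill the slot.
            # No host page fault is involved; an unmapped page is
            # reported as an ordinary software exception.
            self.misses += 1
            if page not in self.page_table:
                raise GuestFault(hex(guest_addr))
            self.tags[slot] = page
            self.bases[slot] = self.page_table[page]
        # Fast path: cached base plus the offset within the page.
        return self.bases[slot] + (guest_addr & (PAGE_SIZE - 1))

    def read_byte(self, guest_addr):
        return self.mem[self.translate(guest_addr)]

    def write_byte(self, guest_addr, value):
        self.mem[self.translate(guest_addr)] = value & 0xFF
```

The point of the sketch is that every guest memory access goes through `translate`, so protection and mapping decisions are made by the emulator in ordinary code rather than by trapping into the host operating system.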

So that people have an opportunity to send beta feedback information, I will take a break from working on Gemulator and SoftMac for a while, so do not expect Beta 3 until after the new year. Please do download it if it is applicable to you and send me feedback directly.

As a segue to the main topic that has been bugging me this week - irresponsible journalism - I want to make a plea to Stephen Coates at the web site Emaculation (http://www.emaculation.com/) to please do a better job in validating and verifying the information posted on his site. For those of you not familiar with it, Emaculation is a web site which has been around for a number of years and tries to pass itself off as a reference site for all matters related to emulating Apple Macintosh. I've had a long standing love-hate relationship with this web site, since I love them for promoting a cause near and dear to my heart - running Mac OS on all sorts of non-Apple host computers - but they do regularly irritate me with their inaccurate posts, and worse, an apparent apathy toward correcting those posts.

Let me give you an example. In mid-August, I posted the Beta 1 releases of Gemulator 2008 and SoftMac 2008. A few days before the formal announcement of their availability, the emulators.com site had some placeholder test builds of these products. Once the Beta 1 releases were posted, I had assumed that sites like Emaculation would let their readers know, and they did. However, if you look at this anonymous posting (http://www.emaculation.com/forum/viewtopic.php?t=5000) it quickly becomes obvious that the posting is misleading. While the site implies that this is an apples-to-apples benchmark of the new Beta 1 against an older release of SoftMac and against another Mac emulator known as Basilisk, the anonymous person behind the post actually benchmarked the placeholder test build (dated August 8th), which was neither an official drop nor even a drop of SoftMac, which was actually posted a week later. Let me quickly explain why this matters: Gemulator and SoftMac are built from nearly identical source code, but each product is tweaked slightly such that Gemulator's performance is tuned for Atari ST emulation while SoftMac's performance is tuned for Apple Macintosh emulation. So it disappoints me that someone out there would jump the gun and benchmark the wrong test build and, almost three months later, still not update the data with the actual beta release that I had intended to get tested and benchmarked.

It is easy for someone to make a legitimate benchmarking error. But I am specifically disappointed with Mr. Coates for not having the moral or ethical backbone to verify the postings made to his web site. I have exchanged private email with Mr. Coates in the past week and he has indicated that he does not verify the postings to his web site. So you end up having a web site that tries to be the Wikipedia of Macintosh emulation, yet does little fact checking and does not remove posts that have such glaring and obvious errors. As someone who is obsessive about fact checking, I just think Mr. Coates should take a little responsibility and put more effort into running his web site.

If web sites don't take responsibility over the accuracy of their content, the Internet will end up as nothing more than a collection of useless web sites that exist merely to host banner ads and collect Google click-through revenue. Google wins, consumers lose. Is this why kids today get into engineering and computers? It is certainly not what attracted me to computers in 1980.

There was a time during the 1980's and early 1990's when most people still read print magazines such as the weekly PC Week or InfoWorld, or the monthly PC Magazine, BYTE, or Computer Shopper. Back when Computer Shopper still had an Atari column and their columnist John Nagy used to write about my Atari emulators, that magazine was almost an inch thick. Each month's issue was chock full of information about Atari, Amiga, Mac, DOS, you name it. Computers were still a hobby, still something that appealed to technophiles with engineering backgrounds, and as such the people who wrote about computers actually said intelligent things.

But as I learned first hand doing the Macworld and COMDEX show circuit in the late 1990's, the average writer who passed himself off as a "journalist" for a newspaper or a magazine had devolved to a clueless monkey merely looking for free handouts. I remember countless "journalists" passing by my booths, stopping to ask me why anyone would want to run Mac OS and Windows on the same computer (no, seriously, they were that clued out), which in 2007 should strike anybody with more than a room temperature IQ as a preposterous question. And yet this was the caliber of computer trade show journalist back around 1999 or 2000. That question was usually followed by a request for a free T-shirt, which I generally refused to journalists as their job was to write about technology, not be bribed with free handouts. In turn they would generally walk away and go pester some other booth for a free T-shirt instead of actually doing their job and interviewing me about my product. It was a bitter lesson to learn, that the technology I fell in love with in 1980 was now just an industry full of bottom feeding opportunists. Pair that with the immoral financial industry and you had the makings of the .com crash.

Fast forward to 2007, when the average person goes online to read today's latest and greatest news from anonymous posters, and print magazines like Computer Shopper have shriveled down to a few sheets of irrelevant paper. There is so much misinformation floating around it's ridiculous. Most "news" sites are nothing more than online parrots that simply reprint the press releases of their paid banner ad sponsors, or worse, simply allow any unverified piece of information to proliferate. You may think there is no harm if the odd piece of false information is floating around. After all, people should be smart enough to recognize bad data when they see it. In the case of the Emaculation example with the Gemulator beta, people can easily download the beta themselves and run their own benchmarks.

But what happens when the bad information comes from so called "experts", from trusted web sites or individuals, and unlike obsessive fact checkers like me, they ask the public to just believe the information without any credible backing data? 60 Minutes ran a great piece last night on "Curve Ball" (http://www.cbsnews.com/stories/2007/11/01/60minutes/main3440577.shtml), a stunning revelation that the entire Iraq invasion and Colin Powell's presentation to the United Nations in 2003 were based upon a blatant lie that nobody bothered to verify. The news media and "journalists" such as Bill O'Reilly at Fox News fueled the flames of war by claiming that they had seen this "evidence" and that it was indisputable. Clearly Bill had not checked to see that anyone had verified the facts. The lies of one person can affect a great many people.

Mac OS X 10.5 "Leopard" Firewall Fiasco

No sooner had much of the world gotten Leopard into their hands than this alarmist posting from a Mr. Schmidt appeared on the site of Heise Security: http://www.heise-security.co.uk/articles/98120

I'm quite disappointed that this Mr. Schmidt would choose to pull such a media stunt, which is basically to make an empty claim that Mac OS X Leopard may expose security holes due to the fact that the firewall allows inbound connections by default. If Mr. Schmidt was truly concerned about the security of Mac users, he would have realized months ago by running the beta releases that this has been the behaviour in Leopard and sounded the alarm then. I'm always amazed how people have no shame in waiting until the exact day that some product gets released to sound the alarm about something that they likely knew well in advance. Other than show that Mac OS was running as intended, did Mr. Schmidt do any further research to actually show that there is a problem? Not that I can tell.

Mr. Schmidt has apparently been clued out to the fact that beta releases of Mac OS X Leopard have been available since at least August 2006, when the WWDC 2006 preview build was made available to Macintosh developers. Mr. Schmidt apparently has also been too busy to read this NO EXECUTE! blog and does not share my views on how to attack computer security issues. If he had, he might not have put his foot in his mouth the way he did in his posting. As I pointed out six years ago this week in this posting on Darek's Secrets (http://www.emulators.com/secrets.htm#VirusMagnet) I generally do not bother to install anti-virus software nor do I recommend to most people that they go waste their money on virus related products such as Symantec this or McAfee that. When I install Windows Vista one of the first things I do is turn off that idiotic Windows Defender service. I do so for the same reasons that were true in 2001 - anti-virus software is only effective against known attacks.

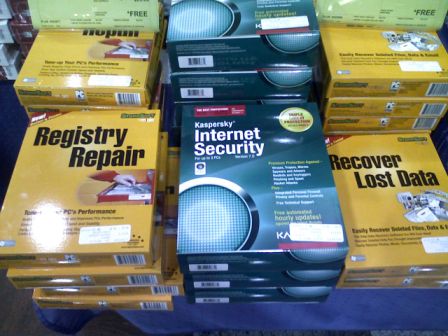

As this blog eloquently explains (http://www.zdnet.com.au/blogs/securifythis/soa/Why-popular-antivirus-apps-do-not-work-/0,139033343,139264249,00.htm) anyone writing malware is first going to check his new creation against common products from Symantec and McAfee and Microsoft. Only when he derives something that fools the existing checkers does he release his new exploit. Therefore, almost by definition it is a waste of your money to buy commonly advertised and over-hyped security products. Is it any wonder that a few days ago when I visited the Fry's Electronics in Renton, WA, the first thing I saw as I walked in the door was a rather shoddy "free software" table featuring, what else, those very same and rather useless security products? "Free" of course being in quotes and being printed with an asterisk on their sign, as this is just one of those annoying rebate scams. My new AT&T Blackjack cell phone that I took these photos with? I have been waiting since July for my $100 rebate (which at this point I doubt will arrive). I hate rebates! It is saddening to see security being relegated to these toy products which at best block poorly written malware, and at worst are a waste of your time, money, and your computer's RAM.

Back to firewalls... at best, enabling a firewall may protect your computer from some network based attacks, provided that you do not actually need those network services, say, file sharing. At worst, the firewall may give you a false sense of security and may even encourage users to act negligently when online specifically because of this false sense of security. This is similar to the false sense of security that airport screening checkpoints serve to provide, which do nothing to stop the way that most people die in airplane accidents - poor mechanical maintenance and human error.

Scanning the web I found this (http://jaraco.livejournal.com/59254.html) excellent blog posting by Jason Coombs which explains better than I can why firewalls may even do more harm than good - they tend to get in the way when you actually need the network service that's being blocked. A firewall masks a problem that may exist in a network service, but that is of little help in identifying and fixing the root cause of the problem. And, since a firewall is itself using operating system calls, it does not protect against fundamental bugs in the lower-level code such as the network device driver itself. Malformed packet bugs anyone?

Not convinced by these rational logical arguments, the well known news site eWeek.com chose to jump on Mr. Schmidt's alarmist claims, running an online article entitled "Leopard Has More Holes than Spots" (http://ct.enews.eweek.com/rd/cts?d=186-8550-54-798-548400-824339-0-0-0-1). Other than being a pun, what does that title even mean? Has NOBODY in the media spent the past year or so beta testing Leopard? If they are so convinced that the firewall settings are bad, could somebody have said something instead of waiting for it to make a conveniently timed headline? eWeek.com should be utterly ashamed of itself for running that piece.

Turning the firewall on or off is a setting. It's an on/off switch that is set to some default value by the setup program and that the user is free to modify. It is not a bug of the operating system. It is not a code defect. This is the distinction that all of these so called experts fail to make: between a policy, such as setting the firewall to some setting by default, and an actual bug in the code that makes the code behave contrary to what it is supposed to do. Remember that prior to the release of Windows XP Service Pack 2 in the summer of 2004, Windows didn't even come with a firewall that most users could easily configure. Windows XP as it first shipped was a wide open barn door. Windows 98 before it was a security nightmare. All versions of XP set the default Administrator password to blank, which means it is trivial to log in with full administrator privileges to a Windows XP machine by default. Where were these experts then to denounce the security holes in XP? Or were their web sites too busy hosting Microsoft-funded banner ads? I really fail to understand the viciousness with which people are attacking Leopard without any credible evidence that there are code defects in the networking services.

Eyeballs Versus Tools

A serious crime against consumers in my opinion is when the irresponsible attitudes of online web sites spill back to the print media. Just last week, the October 31 2007 issue of The Wall Street Journal published another questionable piece by Lee Gomes. He is the same guy I singled out in the Sep 3 posting of NO EXECUTE! for running the rather irresponsible piece in the August 29 2007 edition where he practically laughed off security concerns of surfing the web. This past week, in a piece on page B1 titled "Rage, Report, Reboot, And, Finally, Accept Another System Crash", Mr. Gomes describes how he is simply resigned to the fact that his computer will crash regularly, so his solution is to just back up his files more often.

First telling your readers that it's ok to accept any old email attachment and then telling them to accept regular crashes is just downright irresponsible and hollow. There is nothing constructive in the article. Mr. Gomes would better serve his readers to call in sick and stop writing. Mr. Gomes fuels the flames of misinformation by finishing his piece with a quote from a computer teacher who advocates that Microsoft should allow more people to look at the source code to its operating system as "a million eyes beat two dozen any time". Such a simplistic mindset is naivety, squared.

More eyeballs do not equate to fewer bugs. Linux has been open source from day one and it too still contains security holes and bugs even after more than 15 years of "many eyeballs" development. Mac OS X, which is largely built upon BSD Unix and various open source components, same thing. The eyeballs looking over open source code may not understand the code they are looking at, may not spot the bug, and worst, may belong to someone who then writes an exploit. I am not against open source, but the argument that open source code is less buggy by virtue of more eyeballs is just plain unproven. I challenge anyone to point me at the data to back up that claim.

The bottom line is that software bugs and security exploits exist in all types of software at all levels of the software stack, right on down to the low level drivers and operating system code that the security products themselves rely on. Security and reliability need to be addressed at the very fundamental level of code generation and code execution. This means that developers need tools to help them identify and fix software bugs before they release their software to the public. It also means that computers must execute code assuming that the code is nevertheless buggy and malicious, even the operating system code itself. The key to security and reliability is testing, verification of new code, and giving developers the tools to perform those tasks each and every day, even each time they compile their code.

Just as nobody in the heterosexual community took HIV seriously in the 1980's, and thus allowed a containable infection to turn into a preventable worldwide epidemic that is now ironically a profitable cash cow for the drug industry, the computer industry has not taken security and reliability seriously. Instead, the industry is marketing false hope and the fear of security exploits as a mechanism to sell new upgrades of software (and even hardware, think "NX" bit) and worthless security products that end up having to be given away at Fry's Electronics. It is a shameful exploitation of the consumer.

Recall how at one time Norton Anti-Virus could be disabled with a single Windows registry change: (http://www.securiteam.com/windowsntfocus/5GP0C2A4UO.html). Much as a firewall relies on the network driver being secure, an anti-virus utility relies on the operating system being secure and is rather useless when one can simply ask the operating system to disable it! Security checking cannot exist in the same sandbox as the malicious code it is protecting against. It needs to be done one level of execution lower. But how can you go lower than native machine code being executed directly by the microprocessor?

As I have been explaining for many weeks now, this is the fatal flaw of most security products and of the current wave of hardware virtualization based virtual machines. Only a binary translation based virtual machine (a.k.a. a virtual execution runtime) can provide the mechanism to indirect the execution of code that one extra level. A virtual execution runtime can give developers the needed ability to run their code in a sandbox and analyze the execution of their code. A virtual execution runtime can also give end users security protection by detecting code sequences that may trigger CPU errata, by doing software-based buffer overrun checks, and by performing the checks in a way that is not "visible" to the code being virtualized. This is a fundamental property of the buffer overrun checks in something like Java or .NET. Now it just needs to be extended to all code, native and managed.
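As a rough illustration of a buffer overrun check performed one level below the guest code, consider the toy runtime sketched here. Everything in it is hypothetical: the names `SandboxRuntime` and `GuestOverrun`, the bump allocator, and the load/store interface are invented for the example, and a real binary translation runtime would apply the same idea to translated machine code rather than to Python calls. The thing to notice is that the bounds table lives in the runtime's own state, not in guest memory, so the guest can neither see it nor tamper with it.

```python
class GuestOverrun(Exception):
    """Raised by the runtime when guest code steps outside a buffer."""


class SandboxRuntime:
    """Toy runtime that mediates every guest load and store."""

    def __init__(self, size):
        self.mem = bytearray(size)   # memory the guest can address
        self._bounds = {}            # runtime-private: buffer base -> length
        self._next = 0               # simple bump allocator (assumption)

    def alloc(self, length):
        base = self._next
        if base + length > len(self.mem):
            raise MemoryError("guest heap exhausted")
        self._bounds[base] = length  # recorded outside guest memory
        self._next = base + length
        return base

    def _check(self, base, offset, length):
        # The check runs in the runtime, not in the guest, so guest code
        # has no instruction sequence that can skip or disable it.
        size = self._bounds.get(base)
        if size is None or offset < 0 or offset + length > size:
            raise GuestOverrun(f"base={base} offset={offset} len={length}")

    def store(self, base, offset, data):
        self._check(base, offset, len(data))
        start = base + offset
        self.mem[start:start + len(data)] = data

    def load(self, base, offset, length):
        self._check(base, offset, length)
        start = base + offset
        return bytes(self.mem[start:start + length])
```

With two adjacent 8-byte buffers `a` and `b`, a call such as `rt.store(a, 4, b"OVERFLOW")` would spill into `b` on bare hardware; here the runtime raises `GuestOverrun` before a single byte is written.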

In summary, please stop falling for the rhetoric from these so called "security experts" that are just trying to sell you band-aid products that might throw a few speed bumps down at malware but don't actually fix anything. Companies such as Microsoft, McAfee, and Symantec should stop misleading consumers with their "free" products and focus on producing developer-oriented tools to detect and stop buggy code from shipping to the public in the first place. Between the irresponsible journalism of eWeek, The Wall Street Journal, and the rush of so called security experts making unsubstantiated claims against Leopard, consumers are being misinformed and are rightfully confused. A lot of different people should be ashamed of themselves.

I'm going to keep it short and sweet this week and stop here. The idiotic events of this past week sidetracked me from my planned continuation of the discussion on virtual machine performance, which I will postpone until next Monday. I apologize for the change of topic today and trust that you will keep sending your comments and ideas to me at darek@emulators.com.